Introduction

The Microsoft Power Platform offers a variety of solutions that integrate well with each other. Two services that are often combined are Power BI and Power Automate. You can do this by building flows to automate certain processes that interact with Power BI. One of these processes is to create a flow that triggers a Power BI dataset refresh. However, this comes with some limitations, as I will explain here.

The Problem

When creating a Power Automate flow, you can add a block that triggers a Power BI dataset refresh. However, once this command is sent to the Power BI App Service, the flow just continues without knowing when — and if — the refresh is completed. Depending on your dataset this could take seconds or minutes.

But in many use cases, you do want your flow to know when the refresh is completed in order to inform the user or make other blocks go from there. Before I share how I solved this, let’s first look at a documented approach.

Traditional Solution

There is actually already a method one can use to make Power BI inform the flow the dataset is refreshed. Using the Power BI API, you can directly “talk” to the dataset’s metadata and thus know when it was last refreshed. However, this solution requires you to register an Azure Active Directory (Azure AD) application in Azure.

This involves additional resources, maintenance, and costs for your solution.

As I came across this issue and was not allowed to spin up the Azure application, I had to come up with something else.

A Different Approach

So in summary, we are trying to obtain the following;

- create a Power Automate Flow

- make the flow refresh a Power BI dataset

- have some sort of notification when the refresh is complete

- do not rely on the Power BI API

In order to accomplish this, I came up with the following.

Solution Overview

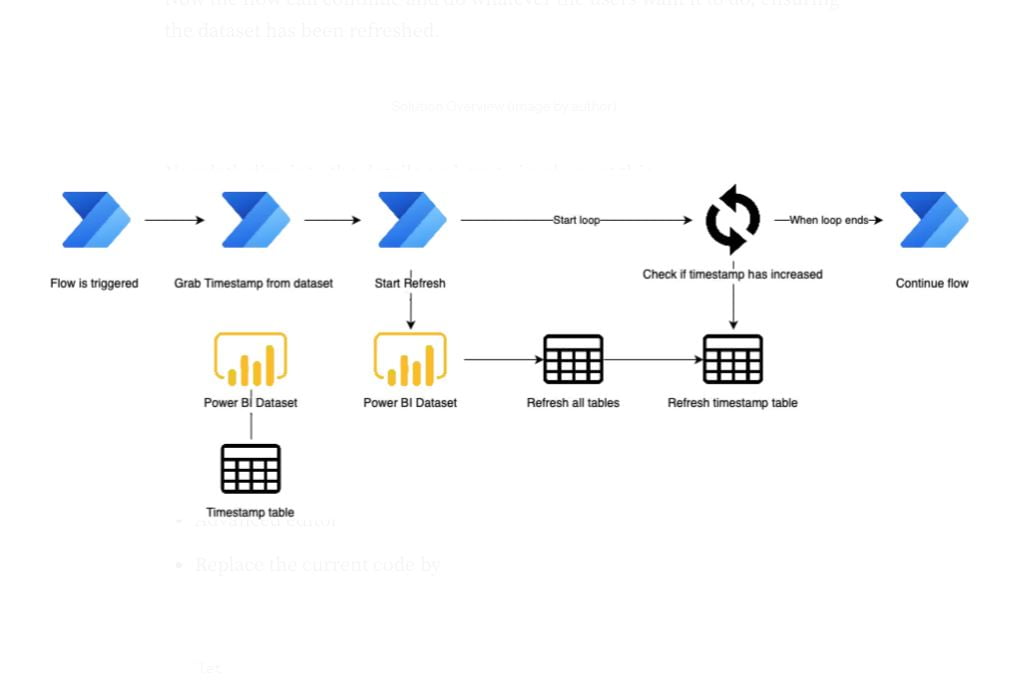

The solution is based on a timestamp that will be added to the dataset. It’s important to note that you can only implement this solution if you have write access to your dataset.

First, an extra table holding a timestamp (current time) is added to the dataset. Whenever the dataset is refreshed, the timestamp will consequently updated.

In the Power Automate flow, before the data refresh is started, it will query the dataset and pull in the value for the timestamp (which is the value when it was last refreshed). This value will be stored and then the refresh will start.

While the refresh is running, the flow will start a loop that will check for the timestamp about every 500 milliseconds. The loop will stop if the timestamp is greater (thus ‘more recent’) than the previously stored timestamp which confirms the dataset has been refreshed.

Now the flow can continue and do whatever the users want it to do, ensuring the dataset has been refreshed.

Now let’s dive into the details on how to implement this.

Step 1: Modify The Dataset

As stated earlier, you will need write access to your dataset. What we need to do here, is add an extra table to your dataset. The dataset will only contain a single value (so one column and one record), being a timestamp. You can do this by:

- Going to transform data

- Add blank query

- Advanced editor

- Replace the current code by

let

Source = Duration.TotalSeconds(DateTime.LocalNow() – #datetime(1970,1,1,0,0,0)),

Source1 = Date,

Source2 = Purchases,

Source3 = Sales,

// add more sources for each table you want to refresh

#”Converted to Table” = #table(1, {{Source}}),

#”Renamed Columns” = Table.RenameColumns(#”Converted to Table”,{{“Column1”, “RefreshTime”}}),

#”Changed Type” = Table.TransformColumnTypes(#”Renamed Columns”,{{“RefreshTime”, Int64.Type}})

in

#”Changed Type”

- Name the table: “RefreshTable”

You should add each source you want to test for refresh to this script. By doing this, the time will not be updated before those sources (tables) are refreshed first.

We will now query this information from the flow.

Step 2: Build The Flow

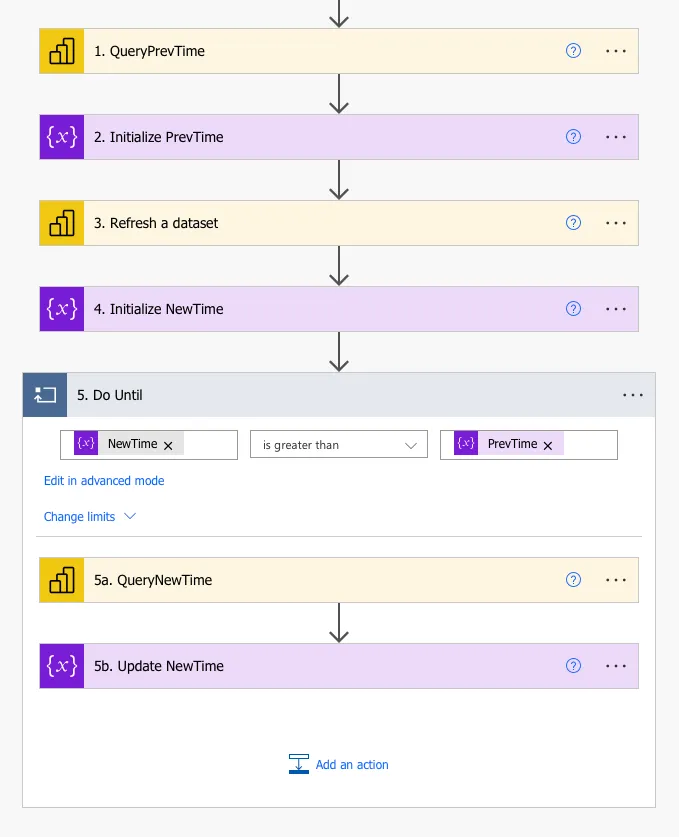

You can use whatever trigger you want for your flow. The blocks we will describe can simply be an addition to whatever flow you already have.

Block 1 – (Power BI — Run a Query against a dataset): Add a Power BI action to “Query a dataset” and add the following query:

EVALUATE(ROW(“TimeStamp”, MAX(RefreshTable[RefreshTime]))

Block 2 – (Initialize variable): Save the output from the previous block as an integer named “PrevTime”, using the following expression:

outputs(‘QueryPrevTime’)?[‘body/firstTableRows’][0][‘[TimeStamp]’]

Block 3 – (Power BI): Refresh the dataset

Block 4 – (Initialize variable): Create an (integer) variable named “NewTime”; value 0.

Block 5 – (Do Until): Create a loop that runs until: “NewTime > PrevTime”. This loop contains two embedded blocks:

Block 5a: Query the Power BI Data with the same query as Block 1.

Block 5b: Set the NewTime variable to the output of 5a (again using the expression from block 2).

The flow should now look like this:

Careful, you might have to increase the default timeout of block 5 if your dataset refresh takes longer (the default is count=60, which is very low/short).

Conclusion

In this article, we explored an innovative workaround for integrating Power BI and Power Automate to ensure a dataset is refreshed before proceeding with subsequent steps in a flow. By implementing a simple timestamp mechanism in the dataset and utilizing a looping construct in Power Automate, we created a reliable method to confirm dataset refresh completion. This solution is both cost-effective and efficient, as it bypasses the need for additional Azure Active Directory registrations and associated costs. Moreover, this approach offers a high degree of flexibility and can be adapted to various use cases, making it a valuable tool for Power Platform users who seek to streamline their data processes without incurring extra costs or complexity.

In summary, we demonstrated how a creative approach can solve practical challenges in data management and automation by leveraging the inherent capabilities of Power BI and Power Automate. This method not only enhances the efficiency of workflows but also opens new possibilities for Power Platform users to customize and optimize their data processes.

Tech Stack

In order to build this solution, you will need the following:

- A Power BI dataset

- A Power Automate Flow

Everything in this solution runs in-browser and requires no local installations.